Working Meeting of the Health Research Community: Report

July 13, 2016

Ottawa, Canada

Table of Contents

- Acknowledgements

- Background

- Meeting Objectives

- Participants

- Summary of Proceedings

- Opening remarks from CIHR

- Introduction from the Facilitator and Moderator

- 2016 Project Grant Competition: Lessons Learned from the Review Committee

- 2016 Project Grant Competition: Lessons Learned from CIHR

- Principles of Intent for High Quality Peer Review

- Indigenous Perspectives on Peer Review

- Concerns with the Current Peer Review System

- Identifying and Addressing Implementation Issues

- Final Outcomes

- Conclusion

- Additional Notes

- Appendix A – Meeting Agenda

- Appendix B – Participant List

Acknowledgements

On behalf of the Canadian Institutes of Health Research (CIHR), we would like to extend our appreciation to all those who took the time to participate in the CIHR Working Meeting on July 13, 2016. Your dedication, astute reflections and energy created a positive atmosphere that ultimately allowed for the group to arrive at pragmatic solutions to improve peer review processes at CIHR.

We would also like to thank Dr. Morag Park and Daniel Normandeau for their excellent facilitation of the meeting, which contributed in no small part to its overall success.

Finally, we would like to thank the entire health research community for its commitment to ensuring the best possible research enterprise in Canada that relies on the efforts of many stakeholders from across the ecosystem, including peer reviewers, chairs, mentors and trainees, members from across Canada's diverse communities, patient groups, and institutions – just to name a few. Together, we can continue to build a more effective health research enterprise and continue to advance health outcomes for all Canadians.

Sincerely,

CIHR's Executive Management Committee

Background

In 2016, the Canadian Institutes of Health Research (CIHR) launched its first Project Grants competition as part of the major reforms to its Investigator-Initiated Research Program and peer review processes. These reforms were intended to contribute to a sustainable Canadian health research enterprise by supporting world-class researchers in the conduct of research and its translation across the full spectrum of health, and to ensure the reliability, consistency, fairness and efficiency of the competition and peer review processes. However, in practice, CIHR faced several challenges with the implementation of these changes, particularly with respect to the online peer review process.

Scientists across Canada publicly voiced their concerns about the peer review process for the 2016 Project competition and called on CIHR to make changes to its processes.

On July 5, 2016, the Minister of Health, the Honourable Jane Philpott, issued a statement noting the growing concerns from the health research community and asked CIHR to convene a working meeting with key representatives of the research community to find common ground and move forward with solutions that address the issues raised with regard to the quality and integrity of CIHR's peer review system.

CIHR convened this meeting on July 13, 2016 in Ottawa.

Meeting Objectives

The objectives of this meeting were:

- To clarify and confirm the key issues raised by the health research community with regard to the quality and integrity of CIHR's peer review system;

- To find common ground and move forward with workable solutions that address the issues related to the peer review system raised by the health research community; and,

- To define a clear way forward.

A copy of the meeting agenda is available in Appendix A.

Participants

The meeting brought together members of Canada's health research community, including scientists, university administrators, the Indigenous research community, senior government officials and representatives from CIHR. There was also a diverse cross-section of participants across career stages, pillars/disciplines, sex and region. A full list of participants is available in Appendix B.

Dr. Morag Park, Professor, Department of Biochemistry and Director, Rosalind and Morris Goodman Cancer Centre, acted as Chair and moderator for the meeting. Daniel Normandeau facilitated the meeting and helped to guide the discussion.

Summary of Proceedings

Opening remarks from CIHR

Dr. Alain Beaudet, President of CIHR, welcomed participants and highlighted his expectation for the day: to reach a common set of pragmatic solutions for improving the peer review of investigator-initiated grants, including solutions that can be implemented in the next round of competitions.

Dr. Beaudet took the opportunity to remind participants that the reforms were established to shift the peer review system towards a personalized (application-focused) evaluation, with each proposal having a set of five experts. This was, in part, a response to a 2010 IPSOS Reid poll of the community that confirmed that a majority of the community was dissatisfied with the quality and consistency of peer review judgements. In addition, it was reported that CIHR was facing increasing difficulty in force-fitting applications into standing committees.

He shared what he believed to be the theoretical advantages of a new online system: (1) ensure that all aspects of the applications – and particularly of interdisciplinary ones – would benefit from appropriate, world-wide expertise, while minimizing conflicts of interest; and, (2) allow CIHR to pursue excellence irrespective of the field of research.

In practice, though, he noted that there were problems with the delivery of the new process, particularly in the first Project scheme pilot. A tipping point was reached with the alarming increase in reports of poor-quality online reviews and a lack of appropriate online discussions.

He also noted his commitment to have a full external assessment of the quality of the more than 15,000 reviews that CIHR received in the course of the first Project scheme competition, and committed to make the results of this audit public.

In terms of outcomes of the day's discussion, strong emphasis was placed on developing a structured option that allows data collection so that the ultimate course of action may be evidence-based.

He concluded by stating the need to rapidly find a compromise that will allow CIHR to move forward with a system that the community has confidence in, that also allows the equitable review of the most meritorious proposals across the entire spectrum of health research: from Pillar 1 to Pillar 4, from disciplinary to multi-disciplinary, from fundamental to applied.

Introduction from the Facilitator and Moderator

Mr. Normandeau stressed the importance of participants being able to share their views freely in a safe space, with the aim of arriving at working solutions by the end of the day. He noted the efforts made to ensure that participants represented a broad range of pillars, areas of expertise and geographic regions. He also noted the participation of CIHR Senior Management, members of its Governing and Scientific Councils, U15 representatives, Indigenous health representatives, and young investigators. Finally, he acknowledged the presence of the Minister of Health's office, the Deputy Minister of Health Canada, and a representative from the Department of Innovation, Science and Economic Development working on the Federal Science Review Panel chaired by Dr. David Naylor.

The Moderator, Dr. Morag Park, explained why she agreed to support this exercise, noting her commitment to the research community, the importance she attributes to having their confidence and the need to restore that confidence. She reiterated the goal of the day, which was to arrive at a consensus on recommendations for how CIHR should run the next competition.

Dr. Park noted that the meeting had been convened extremely rapidly and therefore could not accommodate everyone, but that the participants represented the broad community. While some people would feel left out, the group had committed to sharing the full results of the meeting in detail with the community at large within the coming days.

Prior to the first presentation, the group discussed and rejected the proposed Chatham House Rule as a working parameter for the meeting.

2016 Project Grant Competition: Lessons Learned from the Review Committee

Dr. Shawn Aaron, who served as Chair of the Final Assessment Stage (FAS) committee for the Project competition, delivered a presentation on lessons learned from the inaugural Project Grant competition in which he offered some suggestions from the perspective of the committee. He gave an overview of the stage of the review process, noting that the FAS committee adjudicated 100 'grey zone' applications (i.e. applications that received disparate marks, or received three reviews or fewer during the first phase of review) during a face-to-face meeting in Ottawa in July 2016.

He noted that the Committee encountered significant difficulties with the review (mostly related to the inadequacy of the primary reviews, or lack thereof). He added that CIHR acknowledged that there were problems with the process and CIHR senior executives met with the committee to identify problems and solutions following the final review stage. He also made a point to recognize the tremendous efforts and hard work of CIHR staff throughout the process.

Dr. Aaron recommended combining the best of the old system with the best of the new system to make progress on peer review. He identified a number of shortcomings of the new system and offered some preliminary solutions to address these:

- Increase the length of the application to 7 pages

- Increase the space allocated to 'Approach and Methods' and give this section more weight in the scoring

- Allow applicants to include the full Common CV which lists all publications (he shared anecdotally that reviewers were going on PubMed to check for lists of publications anyway)

- Ensure appropriate reviewers with the right content expertise

- Allow chairs to assign reviewers, which will also help to increase reviewer accountability through personal connection with the chair

- Explore possibility of adding an electronic check (for example, through a character count) that requires reviewers to write a minimum length of comments in their reviews

- Explore possibility of 'blind comments' that are not shared with applicants, but allow reviewers to flag, for example, if they lack expertise in a certain area

- Make it mandatory for CIHR grant holders to review, with exceptions given for extenuating circumstances. This would help increase the quality of review

- CIHR could consider allowing scientists who are applying to the same competition to also act as reviewers in the competition to help increase the pool and quality of reviewers

- Need to increase reviewer engagement in order to improve quality of reviews

- Explore possibility of grouping reviewers and chairs into committees (similar to old structure) as this may help to increase quality and accountability

- Reinstate synchronous discussion, possibly through a digital teleconference

- Increase the size of the 'grey zone' so an increased number of grants are reviewed by the FAS committee.

- Allow the FAS committee to see the top-ranked grants so they can calibrate the 'grey zone' against the best grants that are going to be funded.

In the discussion period that followed Dr. Aaron's presentation, participants raised concerns about the lack of accountability regarding Conflict of Interest (COI) in the new system. While COI reporting will never be perfect, there could be an opportunity for improved management of COI by virtual chairs if they were given the responsibility of assigning reviewers.

Some participants raised the point that the new CIHR peer review system is dehumanized and fails to take into account human nature. They questioned the advantages of virtual review processes/discussions, aside from the reduced cost, which could mean more grants are ultimately funded.

Several participants expressed the view that with the new peer review process, early and mid-career investigators lose out on an important training/educational/mentoring aspect. In the old system of face-to-face peer review, there was increased opportunity for more junior investigators to learn how to write an excellent grant by observing or taking part in face-to-face panel discussions.

Individuals also noted that videoconferencing is not the same as in-person discussion, but that it could prove useful in certain cases (for example, to discuss outliers).

Participants asked whether it was theoretically possible for an individual to sink a grant and bias the system by giving a poor score and skewing variability downwards. CIHR confirmed that based on its modelling, this would be next to impossible.

Some participants agreed that one positive aspect about the new peer review system is that it allows for better review of interdisciplinary research, as compared to the old system.

Participants took issue with the criteria used in the current review system. They indicated that some sections were unclear or overlapping, and that there was an opportunity moving forward to merge the sections on quality and importance of the idea, as well as to reduce the combined weighting for these sections (which currently accounts for 50%).

2016 Project Grant Competition: Lessons Learned from CIHR

Dr. Jeff Latimer, Director General, CIHR, gave an overview of the Project Grant competition and shared some lessons learned from the perspective of CIHR.

In terms of application pressure, CIHR received 4,379 registrations in total for the Project competition, which was higher than anticipated. The actual number of applications submitted was 3,813, representing 3,037 unique Nominated Principal Investigators (NPIs) and a total request of $3.3 billion in funding.

CIHR encountered some difficulty in assigning reviewers for this competition. It originally contacted 9,000 potential reviewers, but only 2,329 agreed to participate. Each reviewer was to be assigned to 8-12 applications, while each application would have 4-5 reviews.
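The reviewer figures above imply a rough capacity check, sketched below in Python. This is an illustration under simplifying assumptions (every reviewer completes a full assignment, and all 3,813 submitted applications require review), not CIHR's planning model. The function and variable names are hypothetical.

```python
def review_range(count, low, high):
    """Return (min, max) total reviews when each of `count` items carries low..high reviews."""
    return count * low, count * high

contacted = 9000      # potential reviewers contacted
reviewers = 2329      # reviewers who agreed to participate
applications = 3813   # applications submitted (assumed to all need review)

acceptance_rate = reviewers / contacted
supply = review_range(reviewers, 8, 12)     # reviews the pool could deliver
demand = review_range(applications, 4, 5)   # reviews the applications require

print(f"Acceptance rate: {acceptance_rate:.0%}")
print(f"Supply range: {supply}, demand range: {demand}")
```

On these assumptions, nominal reviewer capacity sits close to, or above, the number of reviews required, so headcount alone does not appear to explain the difficulty in assigning reviewers.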

In addition, CIHR was dissatisfied with the results of the automated matching solution intended to match reviewers to applications. When asked to assess their ability to review applications, many reviewers indicated that they had low or poor ability to review the grants. As a result, CIHR had to use a manual process to match reviewers to applications, which was done over the course of several weeks. This process delayed CIHR by approximately two months. In addition, 296 reviewers dropped out of the review process on short notice, leaving CIHR little time to find alternate reviewers.

CIHR noted that if they are to use the automated matching solution going forward, significant improvements will need to be made. In the future, virtual chairs also need to be given more time to vet assignments and be comfortable with the quality of reviewers.

CIHR also needs to allow more time for reviewers to conduct reviews. Due to the compressed timeline for the Project Grant competition, and the need to re-assign applications after reviewers dropped out, some reviewers were given only two weeks to complete reviews this round; this is insufficient.

In total, 15,405 individual reviews were completed through the Project Grant competition and 2,898 online discussions were triggered (for about 76% of the applications; discussion was not expected for applications for which reviewers agreed).

One hundred 'grey zone' applications were sent to the FAS Committee for discussion. CIHR acknowledges that insufficient time was provided to the Committee to review the applications, and that there may be merit in moving forward a larger number of 'grey zone' applications for discussion at the Final Stage (for example, 400-500).

CIHR shared some results of the Project Grant competition that would be released on July 15, 2016:

- 491 Full applications will be funded (representing a 13% Success Rate)

- 468 Unique Nominated Principal Investigators (NPIs)

- 445 NPIs with a single grant; 23 NPIs with two grants

- 98 New Investigators

- 127 bridge grants to be funded (45 new investigators; 82 other NPIs)

- In total, the 2016 Project Grant Competition will fund 618 grants and 583 individual NPIs, including 143 New Investigators
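As a quick arithmetic check of the figures in this list, the short Python sketch below recomputes the success rate and the combined totals. The variable names are illustrative only, and the figure of 3,813 submitted applications is taken from earlier in Dr. Latimer's presentation.

```python
# Figures reported by CIHR for the 2016 Project Grant competition.
applications_submitted = 3813
full_grants = 491
bridge_grants = 127
new_investigators_full = 98      # New Investigators among full grants
new_investigators_bridge = 45    # New Investigators among bridge grants

success_rate = full_grants / applications_submitted
total_grants = full_grants + bridge_grants
total_new_investigators = new_investigators_full + new_investigators_bridge

print(f"Success rate: {success_rate:.1%}")             # 12.9%, reported as 13%
print(f"Total grants: {total_grants}")                 # 618
print(f"New Investigators: {total_new_investigators}") # 143
```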

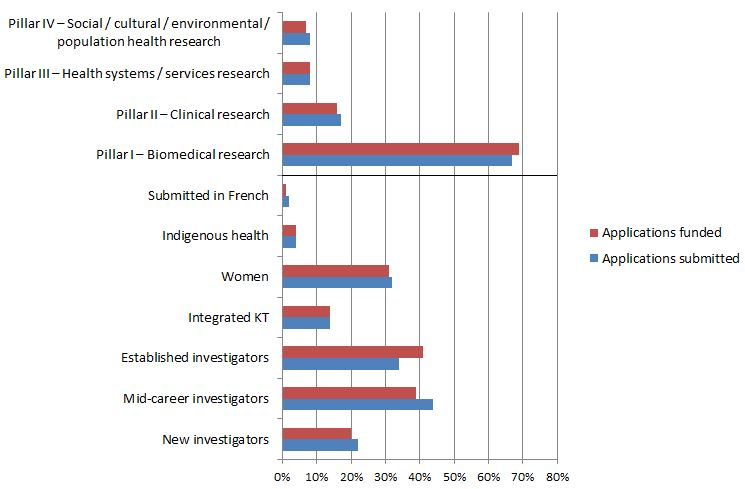

CIHR also shared some of the aggregated results of the Project competition by pillar, sex, Indigenous health research, career stage, etc.:

Breakdown of competition results

| Application breakdown | Applications submitted | Applications funded |
|---|---|---|
| New investigators | 856 (22%) | 98 (20%) |
| Mid-career investigators | 1,662 (44%) | 189 (39%) |
| Established investigators | 1,283 (34%) | 204 (41%) |
| Integrated KT | 539 (14%) | 65 (14%) |
| Women | 1,228 (32%) | 150 (31%) |
| Indigenous health | 147 (4%) | 17 (4%) |
| Submitted in French | 83 (2%) | 6 (1%) |
| Pillar I | 2,540 (67%) | 345 (69%) |
| Pillar II | 666 (17%) | 75 (16%) |
| Pillar III | 296 (8%) | 36 (8%) |
| Pillar IV | 303 (8%) | 35 (7%) |

Note: Details regarding the results of the Project and Foundation Grant competitions.

Based on lessons learned from the delivery of the first Project Grants competition, CIHR shared the following five suggested recommendations / areas for improvement:

- Appropriate time for all reviewers should be provided

- Matching solution improvements should be developed and tested

- Ability to Review categories should be improved and tested for application-focused review

- Virtual Chair workload should be “clustered” and expanded to vet reviewers

- Grey zone and number of committees should be increased

In the discussion period, CIHR noted that it would be doing a full analysis of the results of the Foundation and Project competitions combined, which can be compared against results from the former Open Operating Grants Program (OOGP).

With regard to reviewer attrition, one participant suggested that some of the dropout is likely due to the compressed timeline imposed on reviewers.

Participants asked about the actual cost of conducting face-to-face peer review. CIHR confirmed that one face-to-face peer review committee meeting used to cost approximately $40K, which adds up to about $2 million per year to distribute a budget of roughly $500 million.
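The cost figures quoted above can be related to one another with simple arithmetic, sketched below in Python. The implied number of committee meetings per year and the cost as a share of the budget are inferences from the quoted numbers, not figures stated by CIHR. The variable names are illustrative.

```python
cost_per_meeting = 40_000    # dollars per face-to-face committee meeting
annual_cost = 2_000_000      # dollars per year, approximate
budget = 500_000_000         # dollars distributed, approximate

# Inferred values (assumptions derived from the figures above).
implied_meetings = annual_cost / cost_per_meeting
cost_share_of_budget = annual_cost / budget

print(f"Implied meetings per year: {implied_meetings:.0f}")  # 50
print(f"Cost as share of budget: {cost_share_of_budget:.1%}") # 0.4%
```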

CIHR also mentioned that it is analyzing the quality of the reviews received through the Project competition. For example, CIHR staff has already done an initial assessment of robustness and appropriateness of all 15,405 reviews (for example, checking to see if the reviews had sufficient text and whether they were appropriate in general terms). CIHR noted that it has just issued a Request for Proposals (RFP) to do a full quality assessment of the reviews.

Discussion occurred on the pros and cons of standing review committees. One participant mentioned that CIHR used to have approximately 50 review panels, but that there were requests for many more panels and many applications didn't fit well in any committee. Given the suggestion to have more face-to-face meetings, the question was asked as to how CIHR would go about creating groupings or clusters given the vast and complex nature of health research. In earlier days, experts would be called in to committees to weigh in for multidisciplinary grants; however, it won't be as easy to simply pivot back to earlier, static groupings (e.g. respiratory, cardiovascular). We need to make sure that the new peer review structure is set up to deal with the hybridity of science and the changing landscape.

Participants raised concerns about the current ranking system and the difficulty of ranking applications that are vastly different. CIHR mentioned that the ranking system is the optimal method to ensure that CIHR funds excellence, as the previous practice of setting quotas for areas of science did not necessarily fund the very best research.

Participants agreed with CIHR's recommendation to increase the size of the 'grey zone'.

Participants asked CIHR about the difference between the automated matching solution and the manual scientific review to assign reviewers. CIHR noted that manual matching is unsustainable for a competition of this size but that, given the urgency, it was completed and did show an improved rate of matching.

Principles of Intent for High Quality Peer Review

Dr. Philip Sherman, Scientific Director for CIHR's Institute of Nutrition, Metabolism and Diabetes, provided a historical perspective on the reforms within the context of the evolution of science. He began by noting his strong support for the reforms, explaining that the past system had worked well for some but not all. The IPSOS Reid Poll (2010) of researchers confirmed the general dissatisfaction with the system.

With the evolution of science and the growth of interdisciplinary research, CIHR was experiencing increasing difficulty and witnessing more and more force-fitting of applications into committees. This meant that it was becoming increasingly difficult to surround an application with the expertise needed for an appropriate and equitable review.

The end result meant an increase in interdisciplinary applications being rejected by discipline-based peer-review committees with applications being traded across committees. This was dominating committee and staff time and energy.

The vision behind the reforms was to surround each application with the expertise required to review it effectively. This meant having five reviews based upon the expertise needs of the application (e.g., methodology, population, knowledge translation) and a higher degree of agreement that will benefit the triaging process.

Dr. Sherman further noted that the old committee structure, which required setting uniform quotas or pools across committees, may have conflicted with the concept of excellence.

While acknowledging that the implementation of this new system was not perfect and that important issues need to be addressed, Dr. Sherman stressed that the necessary tools are in place to continue building an optimal peer review system without having to go back to the previous model.

Indigenous Perspectives on Peer Review

Drs. Rod McCormick (Thompson Rivers), Jeff Reading (Simon Fraser University) and Josée Lavoie (University of Manitoba) shared their views on Indigenous matters as they relate to peer review and the overall funding of Indigenous health research.

They stressed the importance of creating peer review processes that are appropriate for Indigenous health research and that address the needs of this unique research community. Currently, the Indigenous research community is not pleased with the CIHR Reforms thus far and does not think that they are fair or sustainable. The speakers mentioned that a working group involving members of the Indigenous research community and representatives from CIHR is currently working to establish peer review processes optimal for Indigenous health research.

They voiced concerns with the low levels of funding for Indigenous health research through the Investigator-Initiated Research Program, as demonstrated, for example, by the low proportion of Indigenous health research grants funded through CIHR's inaugural Foundation Grants competition (only one third of one per cent of the total Foundation grants budget). Given that Indigenous health is a major issue, associated with huge health and health care costs in Canada, they stressed the real need to invest in research to address Indigenous health - and to make sure that peer review processes allow for the support of such research.

Further, they noted that supporting Indigenous health research goes beyond indicating with a tick box the relevance of the work to Indigenous Peoples' health, and that it needs to ensure that projects are addressing the breadth of multidisciplinarity required for Indigenous health research.

It was noted that CIHR has committed to making Indigenous health research a priority, and that members of the Indigenous health research community will be meeting with CIHR's Governing Council at their retreat this summer, which will take place at the Wendake reserve in Quebec.

Dr. Lavoie discussed Canada's role as an international leader in Indigenous research and the need to maintain this status moving forward. She also spoke to the importance of Chapter 9 of the Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans which focuses on guidelines for research involving First Nations, Inuit and Métis Peoples. These guidelines require that researchers engage in meaningful and transformative partnerships in a way that, in turn, increases the ability of communities to address health issues in their own way and independently. In terms of peer review, she noted that international reviewers often have no concept of Canada's Chapter 9 guidelines, which can inadvertently hinder Indigenous health research applicants. The special considerations and conditions surrounding Indigenous research should be taken into account when developing solutions and approaches for peer review.

Concerns with the Current Peer Review System

In addition to the concerns raised in the earlier part of the morning and during the Q&A sessions, participants of the Working Meeting were given the opportunity to share additional concerns with CIHR's peer review processes. The following summarizes some of the key issues raised by the members of the group.

Rating, ranking and review criteria

Many participants voiced concerns about the current rating and ranking system being used by CIHR. Participants explained that it was difficult to have confidence in the system when scientists are not clear on how it works. CIHR was asked to explain how the normalization algorithm, which is used to arrive at the final consolidated ranking, actually works. Dr. Latimer summarized the approach in detail.

Participants also shared that the current rating system for sections of the application is overly confusing and suggested that CIHR replace the current alphabetical categories (i.e. O++, E+, E, G, F, P) with a simpler numerical rating scale (e.g. 1-10).

One participant noted that the new system is designed for an absolute scoring system, yet we use relative scoring with the rank system. With a small sample size of grants to review, it might be difficult for reviewers to adequately rank applications. Dr. Latimer outlined how the approach is operationalized.

Several participants identified that the current weighting system is not appropriate for all types of applications. For example, certain application criteria/categories may be less relevant based on the type of research being undertaken.

Reviewer accountability

Although many of the problems with the recent Project competition peer review were due to technical issues in the new system, participants noted that there is also a responsibility on the scientific community to carry out peer review duties and give back to the system. They agreed that, to some extent, the poor quality of reviews in the last round is the fault of the community. One participant noted that we can design the best system in the world, but it will only work if reviewers step up. Several participants noted that peer review should be mandatory for CIHR grant holders. There is an opportunity to explore other types of accountability mechanisms or incentives to promote quality peer review.

Educational aspect of peer review

Participants emphasized the educational value of peer review in providing constructive feedback, and thereby helping junior investigators to develop grant-writing skills and improve scientific efforts. For example, detailed notes to the applicant from the Scientific Officer (SO) provided guidance to the applicant for the next round. Yet, in the current system, these valuable notes are not provided. This was identified as being a tremendous loss of educational opportunity.

There was also some discussion about the role of peer review as an educational tool, whether that is an underlying function of peer review, and whether this is intended as a core tenet of the system.

Peer review panels and addressing multidisciplinary grants

There was some discussion within the group about the value of bringing back the old peer review panels or committee structure and how this would impact multidisciplinary research. Overall, participants tended to agree that a return to some kind of panel structure would be preferred, as panels would be positioned to retain some corporate memory. However, the old system of static panels was not well-equipped to respond to the needs of multidisciplinary research or the rapid evolution of certain areas of science. Moving forward, a new panel structure would require some degree of flexibility to allow it to meet the needs of the changing scientific landscape. Participants shared that the old panel structure worked very well for some areas of science. In this case, we could consider keeping some of the elements that worked well, but adding new elements that can respond to multidisciplinary research.

Identifying and Addressing Implementation Issues

Participants broke up into groups and discussed a number of key themes or components that had been raised throughout the day. The following describes some of the suggestions proposed by participants to address each of these themes. It was noted that many of the ideas shared were largely reflective of the recommendations in the letter to CIHR from the University Delegates and in the summary provided by the Project Grants FAS committee.

Selection of reviewers

For the selection of the reviewers, there was general agreement amongst participants that CIHR should trust the expertise and knowledge of the virtual chairs to help select and validate the reviewers. This could be carried out with support from the Scientific Officer and CIHR staff. It was suggested that CIHR Institutes (and specifically their Scientific Directors) might be able to assist with the assignment of applications to clusters/panels and assignment of reviewers to applications; however, some also noted that certain Institutes would be overloaded and in some cases would not be well-positioned to carry out this task effectively.

Many also agreed that applicants should be given the flexibility to identify the type of reviewers or competencies required to effectively review their application.

It was also emphasized that to effectively complete the reviews, reviewers must be given sufficient time. Once again, participants also noted the value of synchronous review.

Engagement of reviewers

An overarching theme heard throughout the groups was the need for expanded face-to-face meetings for a variety of reasons including mentorship; the learning experience for young investigators; and the networking opportunities. Participants highlighted the fact that the vast majority of reviewers want to be engaged and are interested in participating in the process.

Participants also discussed the importance of face-to-face meetings in making reviewers accountable to their peers and thereby helping to ensure quality reviews. One suggestion made was to somehow rate reviewers in order to ensure better quality.

In order to re-establish confidence in the community, participants also debated the merit of having incentives to encourage reviewers, although some felt that these incentives might only be attractive for younger, non-tenured researchers and would be less appealing among senior scientists. Other participants recommended that participation in peer review be made mandatory for current and recent grant holders.

Mentorship and feedback

There was unanimous agreement amongst participants on the value of the Scientific Officer notes in allowing unsuccessful applicants to improve their grant proposals for subsequent rounds of funding. Participants also highlighted the desire for meaningful feedback (beyond identification of weaknesses and strengths) in the form of narrative reviews and a desire to ensure accountability. Timing was also stressed as an important aspect of feedback: CIHR should provide reviews to unsuccessful applicants as quickly as feasible to allow them the necessary time to prepare revised applications. To improve accountability among reviewers, participants stressed the need for CIHR to provide quality checks that will ensure meaningful participation in the review process.

The recommendation to allow trainees and junior scientists to observe face-to-face review meetings was also generally endorsed as a strong mechanism that allows for training and mentorship. To ensure this opportunity is seized, some participants suggested that institutions may be willing to provide funding to support participation of observers in face-to-face peer review meetings.

Panels, pools, breadth of mandate, and how to serve multi/interdisciplinary research

The majority of participants agreed that returning to the large number of review panels of the past was not the preferred approach, but that there is a better way to build 'clustered panels'. Some suggested that CIHR's Scientific Directors could assist in this exercise and that the clusters could potentially duplicate the structure of CIHR with 13 panels. It was noted, however, that because the number of applications varies by institute mandate, some institutes would have an increased number of clusters. It was also noted that the CIHR Institute structure would not cover all areas of science, nor would it adequately respond to the issue of multidisciplinary research.

For phase 1 review, some suggested that discussions could take place synchronously via WebEx, Skype or teleconference. However, the challenge of doing this in large volume competitions was noted.

While there was some variance on the exact number, groups reported that between 4 and 5 reviewers per application would be needed, with 120 applications per grouping and a triage in which 40-50% of applications go on to the next phase. Another suggestion was to have, in addition to the panel/cluster reviewer membership, a subset of reviewers who act as floaters: reviewers who bring a specific expertise (e.g., a statistician) and can review across multiple groupings. This would help to ensure that the reviewers have the appropriate complement of expertise for applications, particularly those that are multidisciplinary.
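As a rough illustration of the workload these figures imply, the arithmetic can be sketched as follows. The function name and its parameters are hypothetical and do not describe any CIHR system; the input values are simply the ranges reported by the groups.

```python
def review_workload(applications, reviewers_per_application, advance_percent):
    """Return (total reviews required, applications advancing) for one grouping.

    Hypothetical helper for illustration only: it multiplies out the
    figures reported by the groups and is not a CIHR procedure.
    """
    total_reviews = applications * reviewers_per_application
    # Integer arithmetic avoids floating-point rounding surprises.
    advancing = applications * advance_percent // 100
    return total_reviews, advancing


if __name__ == "__main__":
    # Ranges reported by the groups: 4-5 reviewers, 40-50% advancing.
    for reviewers in (4, 5):
        for percent in (40, 50):
            reviews, advancing = review_workload(120, reviewers, percent)
            print(f"{reviewers} reviewers, {percent}% advancing: "
                  f"{reviews} reviews, {advancing} applications to next phase")
```

Under these assumptions, a grouping of 120 applications would require between 480 and 600 reviews and would send between 48 and 60 applications to the next phase.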

Application forms and scoring

General agreement was reached on a number of fronts in relation to application forms and scoring. These included, for example, strong support for revisiting the rating process, with the suggestion to go back to a simpler numeric rating system. There was also consensus on the need for greater flexibility in application forms and increased length. Many participants suggested increasing the page limit from 7 to 10 pages, with unlimited room for letters of support.

Indigenous research

Several participants underscored the need for ring-fenced funding for Indigenous health. This also included the need for a separate Indigenous review panel.

It was also reported that more focus on cultural needs should be considered in the peer review process. For example, community engagement is not easily adaptable to current processes and timelines for review. Participants also expressed concerns with having international reviewers as they may not have the necessary knowledge of Chapter 9 of the Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans, which relates to research involving Indigenous Peoples, and its applications in a Canadian setting.

Additional comments from the plenary

As groups reported back to the plenary, other recommendations were also presented and debated. Of note, there was general consensus on a number of suggested changes, including, for example, a cap of two applications per competition for individual NPIs. Participants also confirmed their support for synchronous review; a commitment to having a minimum of 4 to 5 reviewers; and an increased percentage of grants going to second-stage review. Morag Park provided a detailed overview of the general consensus heard throughout the session, which was further articulated and reflected in the final outcomes.

Final Outcomes

The following are the solutions that were proposed, and agreed upon, by members of the Working Meeting. These changes received approval from CIHR's Governing Council following an assessment of the organizational feasibility of the recommendations.

Applications

- Applicants will be permitted to submit a maximum of two applications to each Project Grant competition.

- The existing page limits for applications will be expanded to 10 pages (including figures and tables), and applicants will be able to attach unlimited additional supporting material, such as references and letters of support.

Stage 1: Triage

- Virtual Chairs will now be paired with Scientific Officers to collaboratively manage a cluster of applications and assist CIHR with ensuring high quality reviewers are assigned to all applications.

- Each application will receive 4-5 reviews at Stage 1.

- Applicants can now be reviewers at Stage 1 of the competition. However, they cannot participate in the cluster of applications containing their own application.

- Asynchronous online discussion will be eliminated from the Stage 1 process.

- CIHR will revert to a numeric scoring system (rather than the current alpha scoring system) to aid in ranking of applications for the Project Grant competition.

Stage 2: Face-to-Face Discussion

- Approximately 40% of applications reviewed at Stage 1 will move on to Stage 2 for a face-to-face review in Ottawa.

- Stage 2 will include highly ranked applications and those with large scoring discrepancies.

- Virtual Chairs will work with CIHR to regroup and build dynamic panels, based on the content of applications advancing to Stage 2.

- Applications moving to the Stage 2 face-to-face discussion will be reviewed by three panel members. A ranking process across face-to-face committees will be developed to ensure the highest quality applications will continue to be funded.
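The selection of applications for Stage 2 described in the points above (highly ranked applications plus those with large scoring discrepancies) could be sketched roughly as follows. This is a minimal sketch under stated assumptions, not CIHR's actual procedure: the function name, the use of the mean score for ranking, the convention that a higher score is better, and the discrepancy threshold are all hypothetical, since the report does not specify them.

```python
from statistics import mean


def select_for_stage2(scores_by_application, advance_percent=40,
                      discrepancy_threshold=1.5):
    """Return the set of application IDs that would advance to Stage 2.

    Assumptions (not from the report): higher scores are better, ranking
    uses the mean reviewer score, and a "large discrepancy" means the gap
    between the highest and lowest score meets a chosen threshold.
    """
    # Rank applications from highest to lowest mean score.
    ranked = sorted(scores_by_application,
                    key=lambda app: mean(scores_by_application[app]),
                    reverse=True)
    n_top = len(ranked) * advance_percent // 100
    selected = set(ranked[:n_top])

    # Add applications whose reviewers disagreed strongly.
    for app, scores in scores_by_application.items():
        if max(scores) - min(scores) >= discrepancy_threshold:
            selected.add(app)
    return selected


if __name__ == "__main__":
    example_scores = {  # made-up scores for illustration
        "A": [4.5, 4.4, 4.6, 4.5],
        "B": [3.0, 3.1, 2.9, 3.0],
        "C": [1.0, 4.5, 2.0, 2.5],  # reviewers disagree strongly
        "D": [2.0, 2.1, 2.0, 1.9],
        "E": [1.5, 1.4, 1.6, 1.5],
    }
    print(sorted(select_for_stage2(example_scores)))
```

Note that in this sketch the discrepancy rule adds applications on top of the ranked share, so the total advancing can exceed the nominal percentage; how the two criteria would be balanced in practice is not stated in the report.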

Members of the Indigenous community in attendance at the Working Group meeting endorsed the principles and structure as developed by the Working Group, with the proviso that there be a complementary iterative peer review process for proposals with an Indigenous focus for the next and future Investigator-Initiated Research Program competitions. Further, it is understood that all research with an Indigenous focus adjudicated by CIHR Peer Review processes will be in compliance with TCPS Chapter 9.

Conclusion

CIHR is committed to implementing these changes starting with the next round of Project and Foundation Grant competitions in 2016. It was agreed that a Peer Review Working Group will be established under the leadership of the College of Reviewers' Executive Chair along with representative participants drawn from those who attended the Working Group meeting to advise CIHR in the implementation of these changes.

This Peer Review Working Group will also explore options related to adjudication criteria appropriate for ensuring equity across different career stages of applicants, including early-career investigators (ECIs) and mid-career investigators (MCIs). One suggestion to be further explored is to adjust baseline success rates by +5% for ECIs and MCIs.

CIHR will continue to work with the Indigenous research community, including the existing CIHR Appropriate Review Practices Reference Group (Peer Review) on Indigenous Health Research and the Aboriginal Health Research Steering Committee (AHRSC), to find ways to address concerns raised by the community with respect to peer review processes and other issues facing the Indigenous health research community. As part of this ongoing dialogue, CIHR committed to engaging with its Governing Council to explore the possibility of ring-fenced funds in the Investigator-Initiated Research Program for Indigenous health research projects.

Additional Notes

On July 13, 2016, CIHR released a communiqué outlining the outcomes from the Working Meeting. The Minister of Health also issued a statement acknowledging the results of the meeting.

A draft version of this report was shared with participants of the Working Meeting on July 18, 2016, as agreed upon in the Working Meeting Terms of Reference.

On July 22, 2016, CIHR published details of the final outcomes from the Working Meeting to its website, as well as details regarding the establishment of the Peer Review Working Group.

Appendix A – Meeting Agenda

Working Meeting of the Health Research Community

July 13, 2016 from 08:30 to 16:30

Location: Sheraton Hotel – Rideau Room, 2nd floor, 150 Albert Street, Ottawa, ON

| Time | Agenda item |
| --- | --- |
| 08:30 | Welcoming Remarks and Introductions |
| 08:45 | Review of the Agenda |
| 09:15 | Shared Lessons Learned from the Project Grant Competition |
| 09:45 | Views from the Community |
| 10:30 | Health Break |
| 11:00 | The Principles of Intent for High Quality Peer-Review |
| 11:30 | Table Discussions: Developing Workable Solutions |
| 12:30 | Working Lunch |
| 13:15 | Tables Reporting Back - Plenary Discussion |
| 15:00 | Health Break |
| 15:15 | Identifying and addressing implementation issues |
| 16:00 | Key Messages to Communicate |
| 16:30 | Next Steps |
| | Closing Remarks |

Appendix B – Participant List

- Morag Park: Moderator; Director, Rosalind and Morris Goodman Cancer Research Centre, McGill University

- Daniel Normandeau: Facilitator

- Shawn Aaron: University of Ottawa

- Carolina Alfieri: Centre de recherche, CHU Sainte-Justine

- Brenda Andrews: University of Toronto

- Jane Aubin: Canadian Institutes of Health Research

- Meghan Azad: University of Manitoba

- Kristin Baetz: University of Ottawa and Canadian Society for Molecular Biosciences

- Robert L. Baker: McMaster University

- Christian Baron: Université de Montréal

- Alain Beaudet: Canadian Institutes of Health Research

- Lara Boyd: University of British Columbia

- John Capone: University of Waterloo

- André Carpentier: Université de Sherbrooke

- David Clements: Office of the Minister of Health (observer)

- Kristin Connor: Carleton University

- Shelley Doucet: University of New Brunswick Saint John and Dalhousie Medicine New Brunswick

- Michelle Driedger: University of Manitoba

- Aled Edwards: University of Toronto

- John T. Fisher: Queen's University

- Lucie Germain: Laval University

- Alain Gratton: McGill University and Douglas Hospital Research Centre

- Eva Grunfeld: Ontario Institute for Cancer Research and University of Toronto

- David Hill: Lawson Health Research Institute, St. Joseph's Health Care

- Sarah C. Hughes: University of Alberta

- Digvir Jayas: University of Manitoba

- Joy Johnson: Simon Fraser University

- Simon Kennedy: Health Canada (observer)

- Nadine Kolas: Department of Innovation, Science and Economic Development (observer)

- Paul Kubes: University of Calgary

- Jeff Latimer: Canadian Institutes of Health Research

- Josée Lavoie: University of Manitoba

- Scot Leary: University of Saskatchewan

- Emily Gard Marshall: Dalhousie University

- Jean-Yves Masson: Laval University

- Roderick McCormick: Thompson Rivers University

- Jennifer McGrath: Concordia University

- Rod McInnes: Lady Davis Institute for Medical Research and McGill University

- Robert McMaster: Vancouver Coastal Health Research Institute

- Mona Nemer: University of Ottawa

- Peter Nickerson: University of Manitoba

- Michael Owen: University of Ontario Institute of Technology

- Vassilios Papadopoulos: The Research Institute of McGill University Health Centre

- Michel Perron: Canadian Institutes of Health Research

- Caroline Pitfield: Office of the Minister of Health (observer)

- Jeff Reading: Simon Fraser University

- David Rose: University of Waterloo

- Brian Rowe: University of Alberta; Scientific Director, CIHR Institute of Circulatory and Respiratory Health

- Thérèse Roy: Canadian Institutes of Health Research

- Jane Rylett: Western University

- Phil Sherman: The Hospital for Sick Children; Scientific Director, CIHR Institute of Nutrition, Metabolism and Diabetes

- Arjumand Siddiqi: University of Toronto

- Steven P. Smith: Queen's University

- Michael Strong: Western University

- Bill Tholl: HealthCareCAN

- Kelly VanKoughnet: Canadian Institutes of Health Research

- Sam Weiss: University of Calgary

- Lori West (CIHR Governing Council member): University of Alberta

- Holly Witteman: Laval University

- Jim Woodgett: Lunenfeld-Tanenbaum Research Institute

- Terry-Lynn Young (CIHR Governing Council member): Memorial University of Newfoundland
